Dimensionality Reduction Matrix Completion

It covers topics like data processing, regression, classification, clustering, association rule learning, natural language processing, deep learning, dimensionality reduction, etc. Ball tree and KD tree query times can be greatly influenced by data structure.

Enter The Matrix: Factorization Uncovers Knowledge From Omics (bioRxiv)

polynomial-time algorithm with strong performance guarantees under broad conditions. The problem we study here can be considered an idealized version of Robust PCA, in which we aim to recover a low-rank matrix $L_0$ from highly corrupted measurements $M = L_0 + S_0$. Unlike the small noise term $N_0$ in classical PCA, the entries in S.
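The decomposition described above, a low-rank part plus a corruption part, is commonly posed in the Robust PCA literature as a convex program known as Principal Component Pursuit. The statement below is a reference sketch rather than something given in this text: the nuclear norm $\|\cdot\|_*$, the entrywise $\ell_1$ norm, and the weight $\lambda$ are assumptions brought in from that literature, and the value of $\lambda$ shown is the commonly cited default for an $n_1 \times n_2$ matrix.

$$
\min_{L,\,S} \; \|L\|_* + \lambda \|S\|_1
\quad \text{subject to} \quad L + S = M,
\qquad \lambda = \frac{1}{\sqrt{\max(n_1, n_2)}}
$$

Here $\|L\|_*$ (the sum of singular values) encourages $L$ to be low-rank, while $\|S\|_1$ encourages $S$ to have few nonzero entries.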

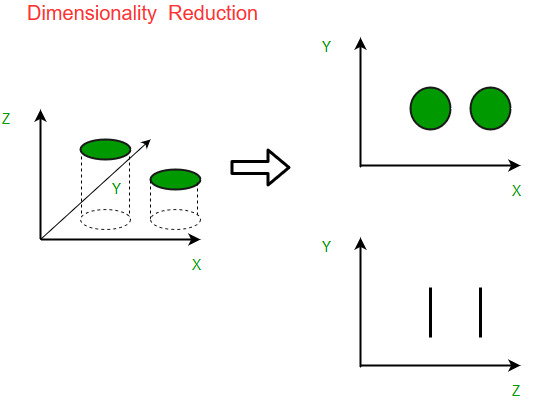

Dimensionality reduction and matrix completion. Dimensionality Reduction: This module introduces dimensionality reduction and Principal Component Analysis, which are powerful techniques for big data, imaging, and pre-processing data. A localization method has been developed using weighted linear regression in. Topic extraction with Non-negative Matrix Factorization and Latent Dirichlet Allocation.

ITCS: Matrix Completion and Related Problems via Strong Duality (full version on arXiv), with Nina Balcan, Yingyu Liang, and Hongyang Zhang. After completion of the course, will I receive the diploma certificate from the University of Hyd. Factor Analysis is a model of the measurement of a latent variable.

Even with relatively small eight dimensions our example text requires exponentially large memory space. Applied Machine Learning - Beginner to Professional course by Analytics Vidhya aims to provide you with everything you need to know to become a machine learning expert. Simple non-convex optimization algorithms are popular and effective in practice.

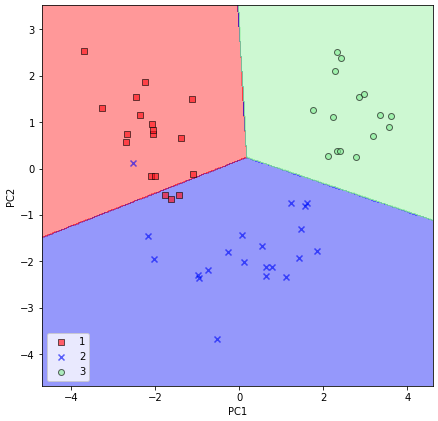

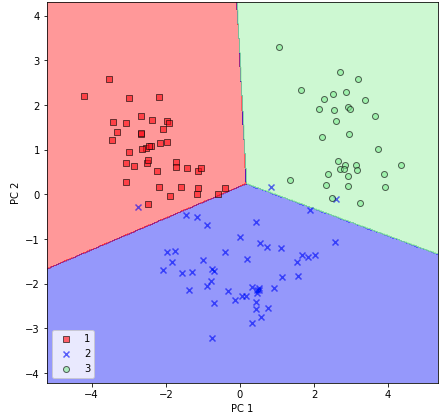

They use these relations to compare the list of top-N. Now I'll present Principal Component Analysis (PCA). All code demos will be in Python, so experience with it or another object-oriented programming language would be helpful for following along with the code examples.

We will cover methods to embed individual nodes as well as approaches to embed entire subgraphs, and in doing so we will present a unified framework for NRL. Matrix completion is the process of filling in the missing entries in a partially observed matrix. If you are comfortable dealing with quantitative information such as understanding charts and.

Selecting dimensionality reduction with. Popular dimensionality reduction algorithms are Principal Component Analysis and Factor Analysis. We will discuss classic matrix factorization-based methods, random-walk based algorithms (e.g., DeepWalk and node2vec), as well as very recent advancements in graph neural networks.

To improve the accuracy of the localization this. At the end of this module you will have all the tools in your toolkit to highlight your Unsupervised Learning abilities in. Robust Principal Component Analysis.

It stems from the experiment that we have just conducted. There was a flaw in our last experiment that I hope you might have caught. ICML: Dimensionality Reduction for the Sum-of-Distances Metric (to appear), with Zhili Feng and Praneeth Kacham. Selected for long talk. Familiarity with secondary school-level mathematics will make the class easier to follow.

In general sparser data with a smaller intrinsic dimensionality leads to faster query times. 316 papers with code. The data matrix may have no zero entries but the structure can still be sparse in this sense.

Matrix completion is a basic machine learning problem that has wide applications, especially in collaborative filtering and recommender systems. Examples of these techniques include dimensionality reduction techniques such as Singular Value Decomposition (SVD), matrix completion techniques, latent semantic methods, and regression and clustering. Topic models provide a useful method for dimensionality reduction and exploratory data analysis in large text.

Stoichiometry is founded on the law of conservation of mass, where the total mass of the reactants equals the total mass of the products, leading to the insight that the relations among quantities of reactants and products typically form a ratio of positive integers. We should have normalized our data first, scaling all the values to be between 0 and 1. Bayesian Inference: 1 paper with code.
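The normalization step mentioned above, scaling all values to lie between 0 and 1, can be sketched as per-column min-max scaling. This is a minimal illustration in plain Python; the function name `min_max_scale` and the sample table are hypothetical, and mapping a constant column to 0.0 is an assumption made here to avoid dividing by zero.

```python
def min_max_scale(rows):
    """Scale each column of a list-of-rows table to the range [0, 1]."""
    columns = list(zip(*rows))  # transpose rows into columns
    scaled_columns = []
    for col in columns:
        lo, hi = min(col), max(col)
        span = hi - lo
        # A constant column has span 0; map it to 0.0 (an assumption).
        scaled_columns.append([(v - lo) / span if span else 0.0 for v in col])
    # Transpose back so the result has the same row layout as the input.
    return [list(row) for row in zip(*scaled_columns)]


# Two features on very different scales.
data = [[1.0, 200.0], [2.0, 400.0], [3.0, 1000.0]]
scaled = min_max_scale(data)
print(scaled)  # [[0.0, 0.0], [0.5, 0.25], [1.0, 1.0]]
```

Scaling each column separately keeps a feature with a large numeric range from dominating distance or variance computations in later steps.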

Imagine we have a vocabulary of 50,000. Principal Component Analysis creates one or more index variables from a larger set of measured variables. Stoichiometry (/ˌstɔɪkiˈɒmɪtri/) is the calculation of reactants and products in chemical reactions in chemistry.

The Netflix problem is a common example of this. The course includes 405 hours on-demand video 19 Articles two supplemental resources and allows free access to mobile TV. We start with basics of machine learning and discuss several machine learning algorithms and their implementation as part of this course.

Dimensionality Reduction with PCA. Name a popular dimensionality reduction algorithm. 13 papers with code.

This step is important because. Matrix Completion Matrix Completion. 7 papers with code Online nonnegative CP decomposition.

Given a ratings matrix in which each entry (i, j) represents the rating of movie j by customer i if customer i has watched movie j, and is otherwise missing, we would like to predict the remaining entries. Most of the matrix is taken up by zeros, so useful data becomes sparse. Model-based techniques analyze the user-item matrix to identify relations between items.
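One common way to predict the missing ratings is to fit a low-rank factorization using only the observed entries. The sketch below is a minimal plain-Python illustration, not a method prescribed by this text: the function names, the toy ratings table, the use of `None` for missing entries, and the hyperparameters (`rank`, `lr`, `reg`, `epochs`) are all assumptions chosen for the example.

```python
import random


def factorize(ratings, rank=2, lr=0.01, reg=0.02, epochs=2000, seed=0):
    """Fit ratings[i][j] ~ dot(P[i], Q[j]) by SGD over observed entries only."""
    rng = random.Random(seed)
    n_users, n_items = len(ratings), len(ratings[0])
    P = [[rng.uniform(0.0, 0.5) for _ in range(rank)] for _ in range(n_users)]
    Q = [[rng.uniform(0.0, 0.5) for _ in range(rank)] for _ in range(n_items)]
    observed = [(i, j, r) for i, row in enumerate(ratings)
                for j, r in enumerate(row) if r is not None]
    for _ in range(epochs):
        for i, j, r in observed:
            err = r - sum(p * q for p, q in zip(P[i], Q[j]))
            for k in range(rank):
                p_ik, q_jk = P[i][k], Q[j][k]
                # Gradient step on squared error with L2 regularization.
                P[i][k] += lr * (err * q_jk - reg * p_ik)
                Q[j][k] += lr * (err * p_ik - reg * q_jk)
    return P, Q


def predict(P, Q, i, j):
    """Predicted rating of item j by user i."""
    return sum(p * q for p, q in zip(P[i], Q[j]))


def rmse(ratings, P, Q):
    """Root-mean-square error over the observed entries."""
    errs = [(r - predict(P, Q, i, j)) ** 2
            for i, row in enumerate(ratings)
            for j, r in enumerate(row) if r is not None]
    return (sum(errs) / len(errs)) ** 0.5


# Rows are customers, columns are movies; None marks an unwatched movie.
R = [[5, 3, None, 1],
     [4, None, None, 1],
     [1, 1, None, 5],
     [1, None, None, 4],
     [None, 1, 5, 4]]

P, Q = factorize(R)
print("training RMSE:", rmse(R, P, Q))
print("predicted rating for customer 0, movie 2:", predict(P, Q, 0, 2))
```

Missing entries never contribute to the loss, which is what separates this from running an SVD on a zero-filled matrix. A good fit on the observed entries does not by itself guarantee good predictions for the missing ones, so in practice some observed ratings are held out for validation.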

Brute force query time is unchanged by data structure. This approach identifies the outliers between the original data matrix and preprocessed data matrix. 82 papers with code Low-Rank Matrix Completion.

The first issue is the curse of dimensionality, which refers to all sorts of problems that arise with data in high dimensions. As this is a diploma program, you will receive the marks memo, provisional degree certificate, and final diploma certificate from the UoH, which is widely recognized in the industry. The primary goal of PCA is dimensionality reduction: working in fewer dimensions can make downstream analysis more efficient, often with little loss of accuracy.
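To make the dimensionality reduction goal of PCA concrete, here is a minimal sketch that computes principal components from the SVD of the centered data. It assumes NumPy is available; the function name `pca` and the synthetic three-feature data set are hypothetical and chosen only so that one direction carries almost all of the variance.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its top principal components via SVD of centered data."""
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)  # center each feature
    # Rows of Vt are the principal directions, ordered by singular value.
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]
    explained_variance = (S[:n_components] ** 2) / (X.shape[0] - 1)
    return Xc @ components.T, components, explained_variance


# Synthetic data: three features that are nearly multiples of one hidden factor.
rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.column_stack([
    t,
    2 * t + 0.01 * rng.normal(size=200),
    -t + 0.01 * rng.normal(size=200),
])

Z, components, explained_variance = pca(X, n_components=1)
X_approx = Z @ components + X.mean(axis=0)  # map back to the original space
print("reduced shape:", Z.shape)
print("max reconstruction error:", np.abs(X - X_approx).max())
```

Because the three features here are almost perfectly correlated, a single component reconstructs the data closely. On real data, the number of components is usually chosen by inspecting how much variance each one explains.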

In this course you will learn a variety of matrix factorization and hybrid machine learning techniques for recommender systems. Face completion with multi-output estimators. Starting with basic matrix factorization, you will understand both the intuition and the practical details of building recommender systems based on reducing the dimensionality of the user-product preference space.

Introduction To Dimensionality Reduction Geeksforgeeks

How To Cross Validate Pca Clustering And Matrix Decomposition Models Its Neuronal

Accuracy Robustness And Scalability Of Dimensionality Reduction Methods For Single Cell Rnaseq Analysis Biorxiv

Low Rank Graph Optimization For Multi View Dimensionality Reduction

Everything You Did And Didn't Know About PCA Its Neuronal

Simple Matrix Factorization Example On The Movielens Dataset Using Pyspark By Soumya Ghosh Medium

Tensor Decomposition For Dimension Reduction Cheng 2020 Wires Computational Statistics Wiley Online Library

Principal Component Analysis For Dimensionality Reduction By Lorraine Li Towards Data Science

Dimensionality Reduction Technique An Overview Sciencedirect Topics

Dimensionality Reduction Techniques Python

Recommendation System Series Part 4 The 7 Variants Of Matrix Factorization For Collaborative Filtering By James Le Towards Data Science
