Matrix Multiplication Kernel Cuda

It ensures that extra threads do not do any work. One platform for doing so is NVIDIAs Compute Uni ed Device Architecture or CUDA.

Matrix Vector Multiplication In Cuda Benchmarking Performance Stack Overflow

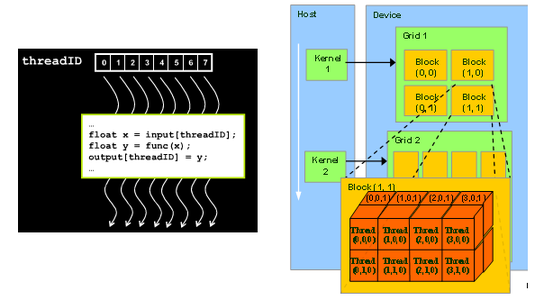

CUDA supports running thousands of threads on the GPU.

Matrix multiplication kernel cuda. OpenCV allocates device memory for them. X block_size_x threadIdx. My CUDA Kernel code for tiled matrix multiplication.

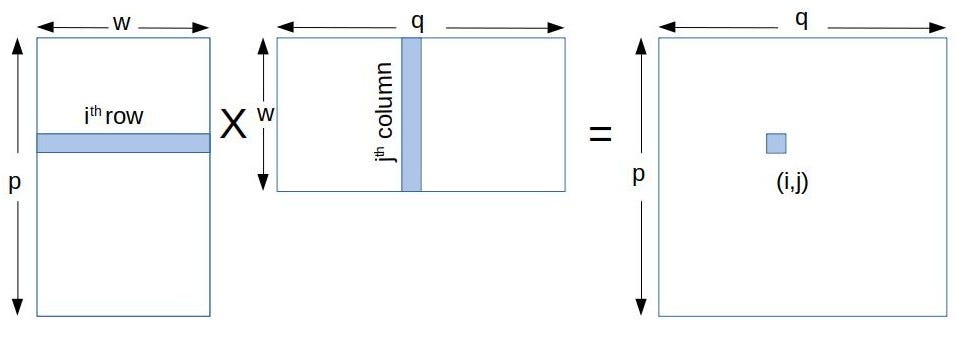

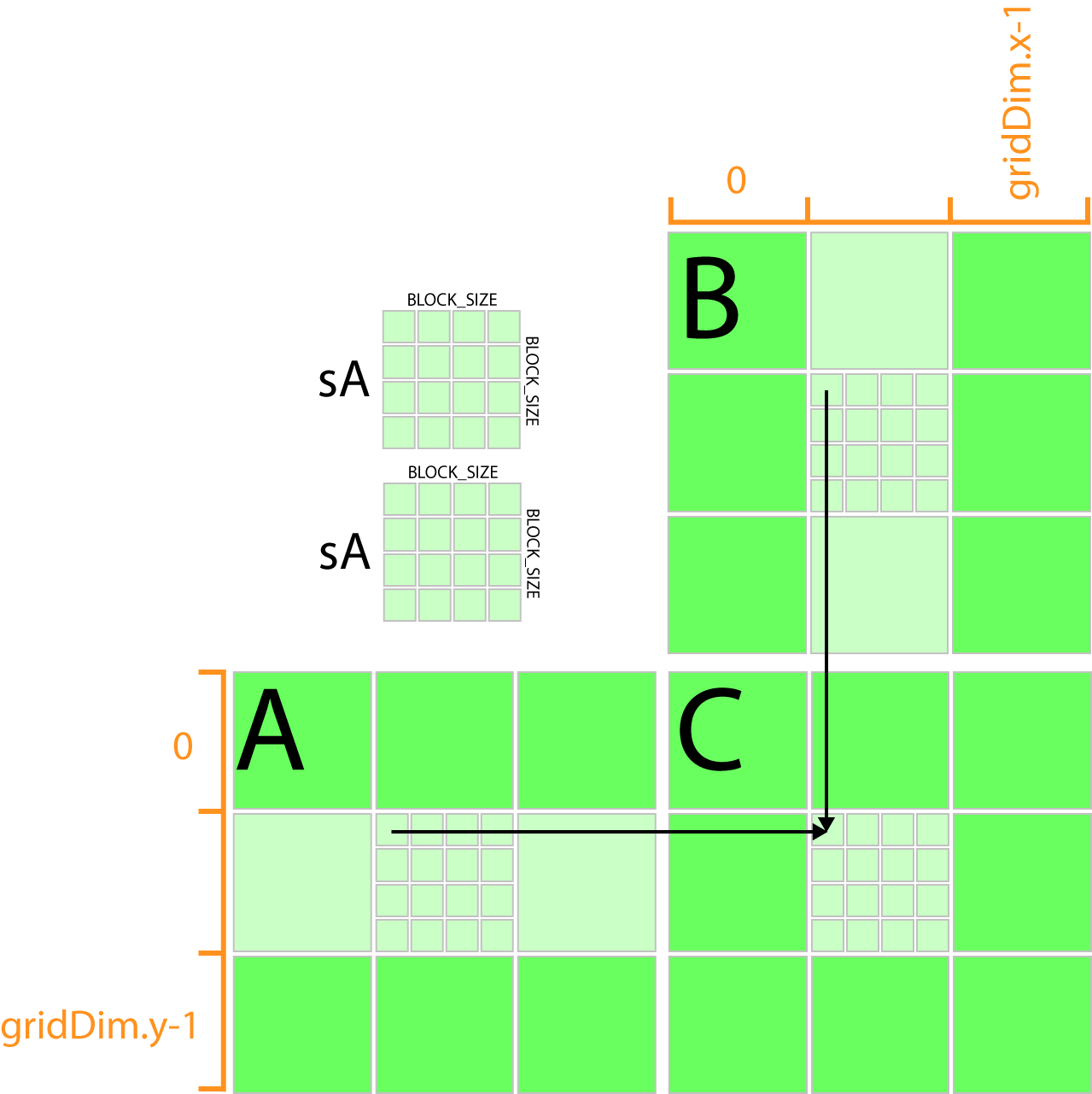

Int tx threadIdxx ty threadIdxy. A is an M -by- K matrix B is a K -by- N matrix and C is an M -by- N matrix. GPUProgramming with CUDA JSC 24.

However I can make partitions of x and At tranposed A easily and call the kernel how many times as neccesary. However APIs related to GpuMat are meant to be used in host code. __shared__ float Bshare TILE_WIDTH TILE_WIDTH.

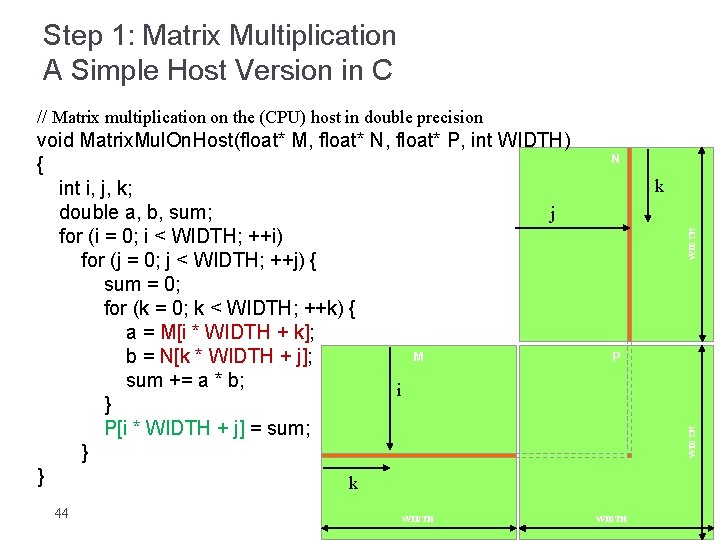

This new kernel is faster than the one equivalent to yours note that I dont count the time I spend tranposing the matrix but as you said the size of the input x is limited by the size of the shared memory. For simplicity let us assume scalars alphabeta1 in the following examples. Each thread has an ID that it uses to compute memory addresses and make control decisions.

GEMM computes C alpha A B beta C where A B and C are matrices. Efficient Matrix Multiplication on GPUs. Define TILE_WIDTH 16 __global__ void matrixMulKernel float A float B float C int width __shared__ float Ashare TILE_WIDTH TILE_WIDTH.

Int width gridDimx TILE_DIM. All threads run the same code. A task done for the unit GPU Architecture and Programming ENG722S2.

A CUDA kernel is executed by an array of CUDA threads. Lets start by looking at the matrix copy kernel. Y block_size_y threadIdx.

Int y blockIdxy TILE_DIM threadIdxy. I yi alphaxi yi Invoke serial SAXPY kernel. Float sum 00.

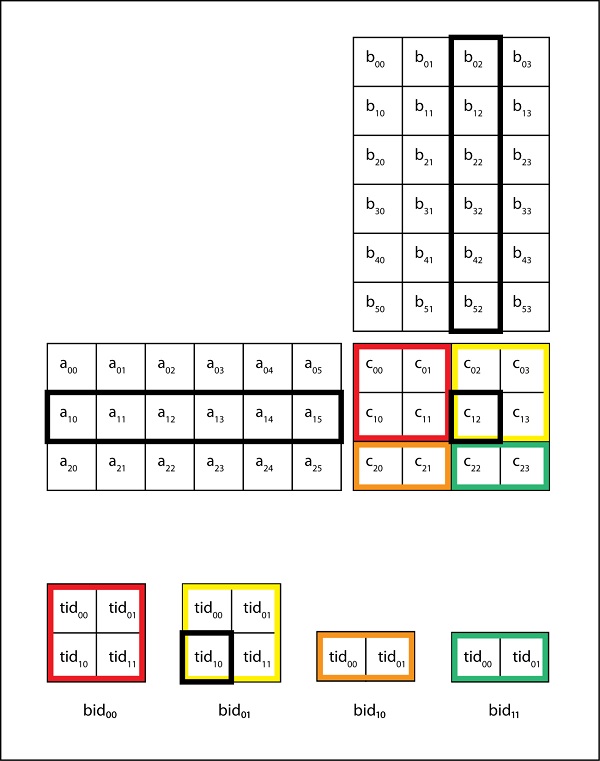

Implements tiled matrix multiplication in CUDA through two methods. Calculate the row and column for this element of the matrix. April 2017 Slide 2 Distribution of work Kernel function Each thread computes one element of the result matrix C n n threads will be needed Indexing of threads corresponds to 2d indexing.

Execute the following cell to write our naive matrix multiplication kernel to a file name matmul_naivecu by pressing shiftenter. The above condition is written in the kernel. __global__ void copyfloat odata const float idata int x blockIdxx TILE_DIM threadIdxx.

Matrix multiplication between a IxJ matrix d_M and JxK matrix d_N produces a matrix d_P with dimensions IxK. We use the example of Matrix Multiplication to introduce the basics of GPU computing in the CUDA environment. So you have to write your own kernel for your matrix multiplication.

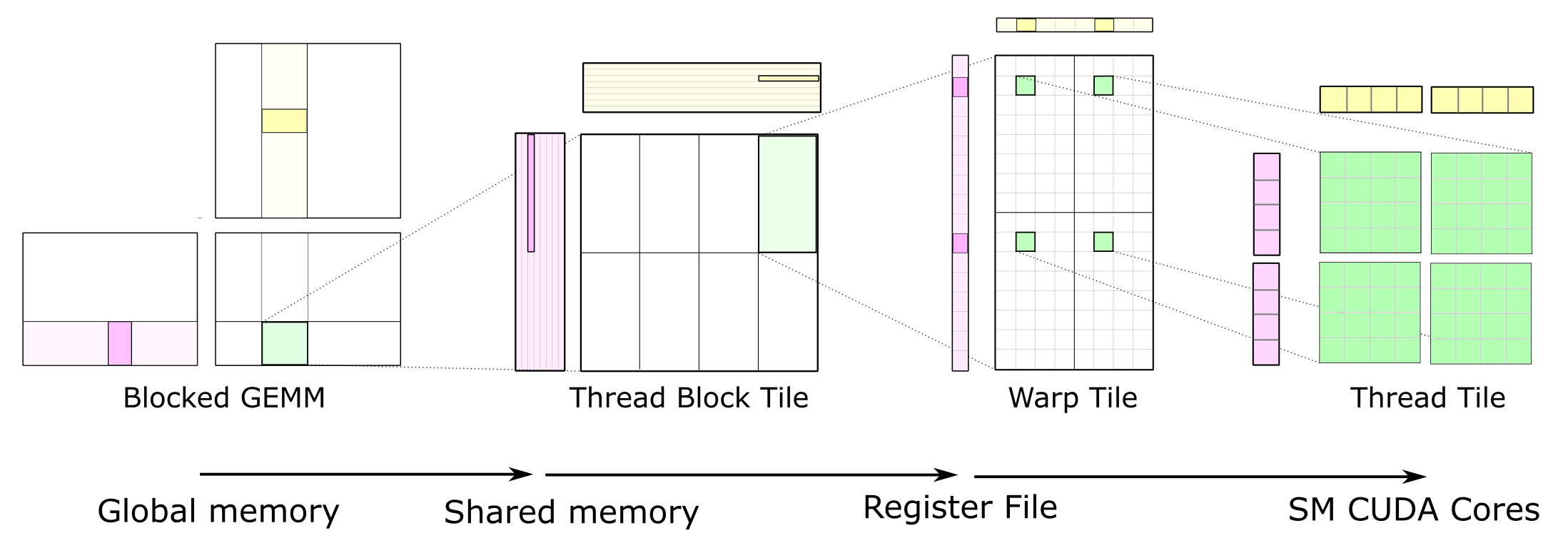

J BLOCK_ROWS odatayjwidth x. Each thread block consists of 256 threads and each thread computes an 8 x 8 block of the 128 x 128 submatrix. Each element in C matrix.

For int j 0. Matrix dimensions must be multiples of BLOCK_SIZE. Examples of Cuda code 1 The dot product 2 Matrixvector multiplication 3 Sparse matrix multiplication 4 Global reduction Computing y ax y with a Serial Loop void saxpy_serialint n float alpha float x float y forint i 0.

CUDA organizes thousands of threads into a hierarchy of a grid of thread blocks. The matmul kernel splits the output matrix into a grid of 128 x 128 submatrices each submatrix is assigned to a thread block. Int y blockIdx.

The formula used to calculate elements of d_P is. It is assumed that the student is familiar with C programming but no other background is assumed. For int k 0.

Matrix multiplication in CUDA this is a toy program for learning CUDA some functions are reusable for other purposes. Test results following tests were carried out on a Tesla M2075 card lzhengchunclus10 liu aout. Nvcc -o mult-matrixo -c mult-matrixcu Sample.

Obvious way to implement our parallel matrix multiplication in CUDA is to let each thread do a vector-vector multiplication ie. Cs355ghost01 1939 mult-matrix 1000 K 256 NN 1000000K 256 3906250000 --- use 3907 blocks Elasped time 43152 micro secs errors 0. Writefile matmul_naivecu define WIDTH 4096 __global__ void matmul_kernel float C float A float B int x blockIdx.

For matrix multiplication you have to write your own kernel. OpenCV provides a class called cvcudaGpuMat. Please type in m n and k.

Int bx blockIdxx by blockIdxy. Time elapsed on matrix multiplication of 1024x1024.

Github Kberkay Cuda Matrix Multiplication Matrix Multiplication On Gpu Using Shared Memory Considering Coalescing And Bank Conflicts

Matrix Multiplication Example A The Host Code Sets Up And Executes Download Scientific Diagram

Cutlass Fast Linear Algebra In Cuda C Nvidia Developer Blog

Cuda Reducing Global Memory Traffic Tutorialspoint

Matrix Multiplication In Cuda A Simple Guide By Charitha Saumya Analytics Vidhya Medium

Cuda Tiled Matrix Multiplication Explanation Stack Overflow

Matrices Multiplying Gives Wrong Results On Cuda Stack Overflow

Matrices Multiplying Gives Wrong Results On Cuda Stack Overflow

Tiled Matrix Multiplication Kernel It Shared Memory To Reduce Download Scientific Diagram

Partial Kernel Codes For Matrix Multiplication Cuda Keywords Are Bold Download Scientific Diagram

Partial Kernel Codes For Matrix Multiplication Cuda Keywords Are Bold Download Scientific Diagram

Cs Tech Era Tiled Matrix Multiplication Using Shared Memory In Cuda

Https Edoras Sdsu Edu Mthomas Sp17 605 Lectures Cuda Mat Mat Mult Pdf

Using The Cuda Programming Model Leveraging Gpus For

Running A Parallel Matrix Multiplication Program Using Cuda On Futuregrid

Cuda Memory Model 3d Game Engine Programming

Simple Matrix Multiplication In Cuda Youtube

Matrix Vector Multiplication In Cuda Benchmarking Performance Stack Overflow

2 Matrix Matrix Multiplication Using Cuda Download Scientific Diagram