Matrix Multiplication Performance Algorithm

This problem arises in various scientific applications such as in electronics robotics. The following naive algorithm implements C C A B.

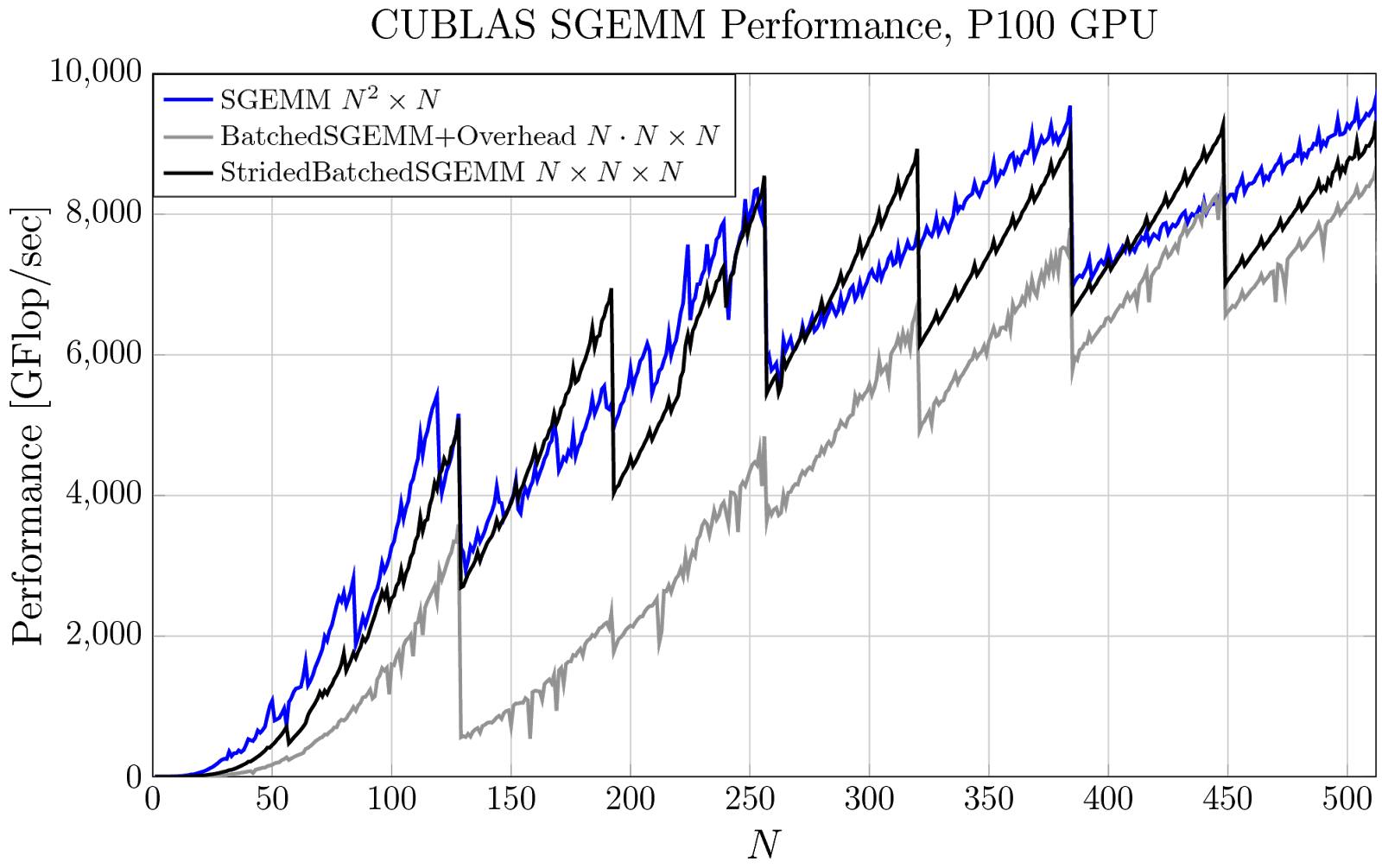

Pro Tip Cublas Strided Batched Matrix Multiply Nvidia Developer Blog

HIGH-PERFORMANCE MATRIX MULTIPLICATION 807 patterns space-filling curves 17 such as Morton order that possess locality in both dimensions have been proposed to store matrices.

Matrix multiplication performance algorithm. Frens and Wise 18 used a recursive matrix-multiplication algorithm in conjunction with a matching recursive array layout and demonstrated the beneficial effects of the. Cj ABj Cj for j 1NC C1C2C3 A B1B1B1 multiple panel-matrix multiplies. X X0 X1 XN1 0 B B B Xˇ 0 Xˇ 1.

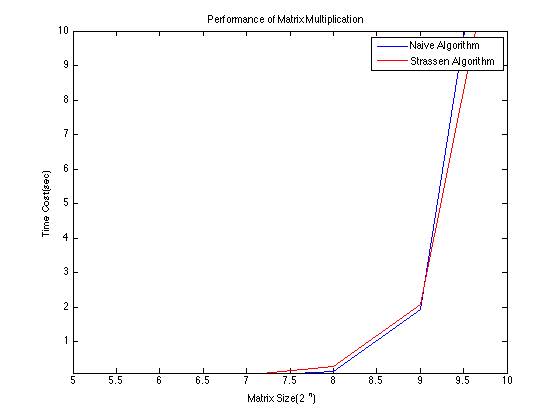

This is a performance test of matrix multiplication of square matrices from size 50 to size 1500. The results are an average calculated from 10 runs. Naive Matrix Multiplication Algorithm.

Unfortunately while simple this algorithm suffers from poor locality. Thus these algorithms provide better performance when used together with large multiple precision. The essence in the solutions of.

C i AiB Ci for i 1MC C 1 C 2 C 3 A 1 A 2 A 3 B multiple panel-panel multiplies C PN A p Ap B p C C A 1A2A3 B 1 B 2 B 3 22 A Cost Model for Hierarchical Memories. We obtain a new parallel algorithm that is based onStrassens fast matrix multiplication and minimizes communi-cation. For i 1 to n for j 1 to n for k 1 to n C ij C ij A ik B kj.

For k0k. We will show how to implement matrix multiplication CCAB on several of the communication networks discussed in the last lecture and develop performance models to predict how long they take. The development of high-performance matrix multiplication algorithms is important in the areas of graph theory three-dimensional graphics and digital signal processing.

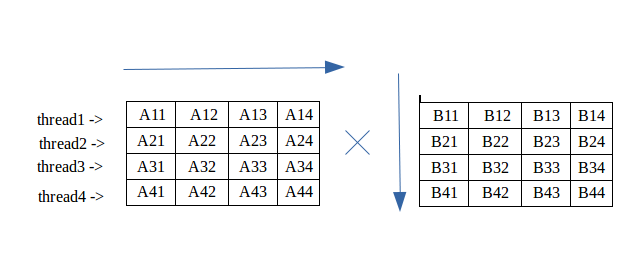

The Matrix multiplication algorithms are one of most popular solution used to show the functionalities of Parallel Algorithm so it became an important tool to solve hard problems in a reasonable time. A Family of High-Performance Matrix Multiplication Algorithms 53 multiple matrix-panel multiplies. This algorithm has been implemented on IBM POWERparallel TM SP2 TM systems up to 216 nodes and has yielded close to the peak performance of the machine.

As you can see the lines of the sequential and parallel functions cross two times. The algorithm outperforms all known parallel matrixmultiplication algorithms classical and Strassen-based bothasymptotically and in practice. The Chain Matrix Multiplication Problem CMMP is an optimization problem that helps to find the optimal way of parenthesization for Chain Matrix Multiplication CMM.

For given matrices A and B the Strassen and Winograd algorithms for matrix multiplication being categorized in the divide-and-conquer algorithms can reduce the number of additions and multiplications compared to the normal matrix multiplication particularly for large-sized matrices. The measurements are indicated by dots on the lines and the lines themselves are smoothed. Given an m n matrix X we will only consider partitionings of X into blocks of columns and blocks of rows.

The partitioning of matrices is fundamental to the description of matrix multipli-cation algorithms. Parallel matrix multiplication is one of the most studied fun-damental problems in distributed and high performance com-puting. Matrix-Matrix Multiplication on CPUs The following CPU algorithm for multiplying matrices ex-actly mimics computing the product by hand.

We present a quantitative comparison of the theoretical and empirical performance of key matrix multiplication. We will see that the performance depends on several factors. Matrix multiplication example Let A B and C be square matrices of order n n.

Example of Matrix multiplication. Elements of B are accessed columnwise and. The algorithm has been combined with Winog.

Xˇ M1 1 C C C A where Xj has nb columns and X ˇi has mb rows except for XN1 and XM1 which. A - matrix of dimensions nxm B - matrix of dimensions mxn C - resultant matrix C A x B for i 1 to n for j1 to m for k1 to n C ijC ij A ik B kj First let us see if we can do any SIMD Single Instruction Multiple Data type operations. For i0i.

Figure 153 shows a tiled algorithm that makes use of the MKL function for double-precision DP matrix multiplication cblas_dgemm although not all input parameters to cblas_dgemm are shown. The 3D parallel matrix multiplication approach has a factor P 16 less communication than the 2D parallel algorithms. Defining each call to a cblas_dgemm as the compute task there is a high task concurrency because there is no dependence between cblas_dgemm calls which compute different tiles of the C matrix.

Toward An Optimal Matrix Multiplication Algorithm Kilichbek Haydarov

How To Speed Up Matrix Multiplication In C Stack Overflow

Communication Costs Of Strassen S Matrix Multiplication February 2014 Communications Of The Acm

Tiled Algorithm An Overview Sciencedirect Topics

Tiled Algorithm An Overview Sciencedirect Topics

Numpy Matrix Multiplication Numpy V1 17 Manual Updated

Performance Critical A B Part Of The Gemm Using A Tiling Strategy A Download Scientific Diagram

Why Is Matrix Multiplication Faster With Numpy Than With Ctypes In Python Stack Overflow

Comparing Python Numpy Numba And C For Matrix Multiplication Stack Overflow

Strassen S Matrix Multiplication Algorithm

Pseudocode For Matrix Multiplication Download Scientific Diagram

Multiplication Of Matrix Using Threads Geeksforgeeks

Cs267 Notes For Lecture 9 Part 2 Feb 13 1996

Toward An Optimal Matrix Multiplication Algorithm Kilichbek Haydarov

Pdf Comparing Cpu And Gpu Implementations Of A Simple Matrix Multiplication Algorithm Semantic Scholar

Toward An Optimal Matrix Multiplication Algorithm Kilichbek Haydarov

Tiled Algorithm An Overview Sciencedirect Topics

Pseudocode For The Matrix Multiplication Algorithm Download Scientific Diagram

Cs267 Notes For Lecture 9 Part 2 Feb 13 1996