Matrix Multiplication Is Differentiable

This is enormously useful in applications, as it makes it possible. As is the case in general for partial derivatives, some formulae may extend under weaker analytic conditions than the existence of the derivative as an approximating linear mapping.

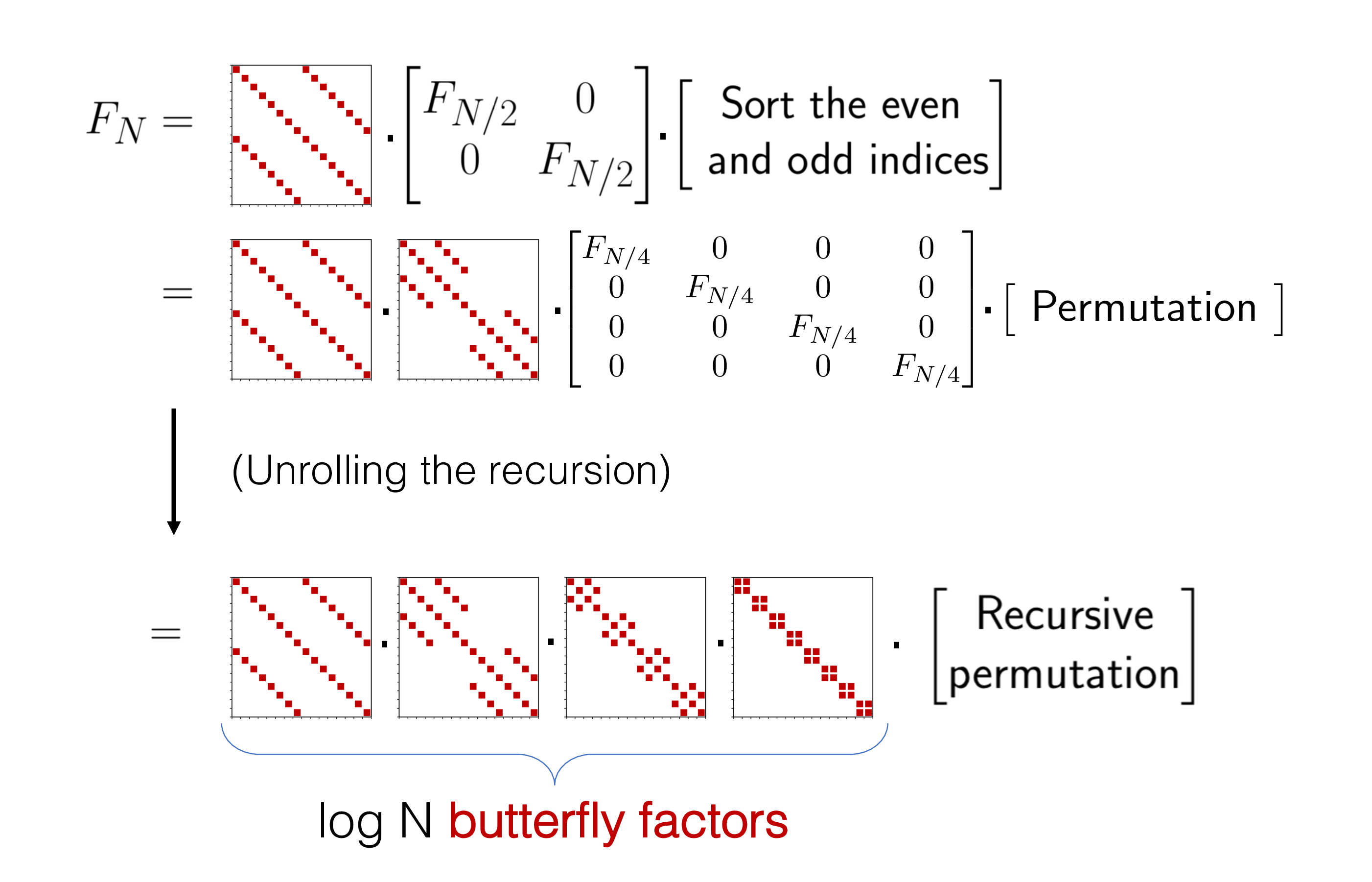

Butterflies Are All You Need: A Universal Building Block for Structured Linear Maps (Stanford DAWN)

We have that $AA^T = \sum_{i=1}^{n} a_i a_i^T$, that is, the product $AA^T$ is the sum of the outer products of the columns of $A$.
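Below is a quick numerical check of this identity. It uses plain Python lists so that it needs no dependencies. The helper names (`transpose`, `matmul`, `outer`, `add`) and the example matrix are arbitrary choices made for illustration.

```python
def transpose(M):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]


def matmul(X, Y):
    """Naive product of an m x n and an n x p matrix (lists of rows)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def outer(u, v):
    """Outer product u v^T of two vectors."""
    return [[ui * vj for vj in v] for ui in u]


def add(X, Y):
    """Entrywise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


A = [[1, 2, 3],
     [4, 5, 6]]  # m = 2 rows, n = 3 columns

m = len(A)
total = [[0] * m for _ in range(m)]
for a_i in transpose(A):  # iterate over the n columns a_1, ..., a_n of A
    total = add(total, outer(a_i, a_i))

print(total)                    # [[14, 32], [32, 77]]
print(matmul(A, transpose(A)))  # [[14, 32], [32, 77]]
```

Each outer product $a_i a_i^T$ is an $m \times m$ rank-one matrix, so the sum has the same $m \times m$ shape as $AA^T$.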

Matrix multiplication is differentiable. We will look at arithmetic involving matrices and vectors, finding the inverse of a matrix, computing the determinant of a matrix, linearly dependent/independent vectors, and converting systems of equations into matrix form. There are only four variables in the state, and a network with two layers can solve the problem.

The exponential of a matrix is always an invertible matrix. This is analogous to the fact that the exponential of a complex number is always nonzero. The columns of a matrix $A \in \mathbb{R}^{m \times n}$ are $a_1$ through $a_n$, while the rows are given as vectors by $\bar{a}_1^T$ through $\bar{a}_m^T$. Which is the matrix multiplication of and.

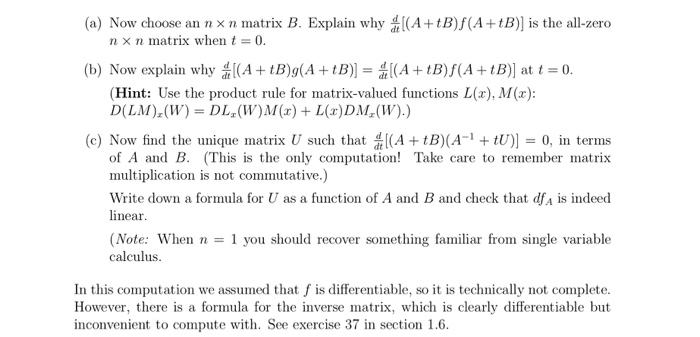

Those linear operators can ultimately be implemented by general matrix multiplication (GEMM). The matrix exponential then gives us a map $\exp\colon \mathbb{R}^{n \times n} \to GL_n(\mathbb{R})$. Prove that matrix multiplication and inversion are differentiable.
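Here is a sketch of the standard argument for this exercise. It uses the names $g(A, B) = AB$ for multiplication and $f(X) = X^{-1}$ for inversion. The norm $\|\cdot\|$ is any submultiplicative norm on $n \times n$ matrices, with $\|(H, K)\| = \max(\|H\|, \|K\|)$ on pairs. For small $H$, the matrix $X + H$ stays invertible because $GL_n(\mathbb{R})$ is open.

```latex
\begin{aligned}
(A + H)(B + K) &= AB + (HB + AK) + HK,
  \qquad \|HK\| \le \|H\|\,\|K\| = o\big(\|(H, K)\|\big)
  &&\Longrightarrow\quad Dg(A, B)[H, K] = HB + AK, \\
(X + H)^{-1} - X^{-1} &= -X^{-1} H (X + H)^{-1}
  = -X^{-1} H X^{-1} - X^{-1} H \big[(X + H)^{-1} - X^{-1}\big]
  &&\Longrightarrow\quad Df(X)[H] = -X^{-1} H X^{-1}.
\end{aligned}
```

In the second line, the bracket equals $-X^{-1} H (X + H)^{-1}$ by the same identity. By continuity of inversion, $\|(X + H)^{-1}\|$ stays bounded near $X$. The last term is therefore $O(\|H\|^2)$, which is what makes $H \mapsto -X^{-1} H X^{-1}$ the derivative.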

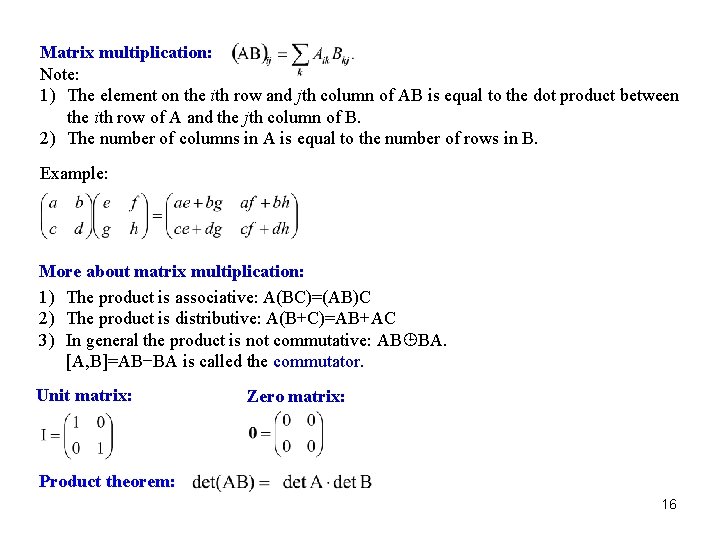

Definition 3 (Matrix Multiplication). Let $A$ be $m \times n$ and $B$ be $n \times p$, and let the product $AB$ be $C = AB$; then $C$ is an $m \times p$ matrix with element $(i, j)$ given by $c_{ij} = \sum_{k=1}^{n} a_{ik} b_{kj}$. In this section we will give a brief review of matrices and vectors. The multivariate chain rule states.
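The definition translates directly into three nested loops. The minimal sketch below follows the formula $c_{ij} = \sum_{k} a_{ik} b_{kj}$ literally. It stores matrices as lists of rows, which is an assumption made for readability rather than speed.

```python
def matmul(A, B):
    """Compute C = AB with c_ij = sum_k a_ik * b_kj.

    A is m x n and B is n x p, both stored as lists of rows;
    the result C is m x p.
    """
    m, n = len(A), len(A[0])
    if len(B) != n:
        raise ValueError("inner dimensions must agree: A is m x n, so B must be n x p")
    p = len(B[0])
    C = [[0] * p for _ in range(m)]
    for i in range(m):          # row of C
        for j in range(p):      # column of C
            for k in range(n):  # shared inner index
                C[i][j] += A[i][k] * B[k][j]
    return C


A = [[1, 2, 3],
     [4, 5, 6]]   # 2 x 3
B = [[7, 8],
     [9, 10],
     [11, 12]]    # 3 x 2
print(matmul(A, B))  # [[58, 64], [139, 154]]
```

Optimized GEMM routines compute the same sums. They reorder and block the loops for cache efficiency, but the arithmetic being performed is exactly this definition.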

Neural Networks with General Matrix Multiplication. Modern neural networks extensively adopt fully-connected layers and convolutional layers to achieve linear projection and feature extraction. For example, a 2-dimensional $K \times K$ convolution can be described. With such a simple problem and network, maybe that isn't so bad.
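One common way to express a $K \times K$ convolution as general matrix multiplication is im2col ("unfold"):

1. Copy every $K \times K$ input patch into one row of a matrix.
2. Multiply that matrix by a matrix whose columns are the flattened filters.
3. Fold the result back into output maps.

The sketch below assumes a single input channel, stride 1, no padding, and the cross-correlation convention used by most deep-learning frameworks. The function names are hypothetical, and production libraries use heavily optimized variants of this idea.

```python
def matmul(A, B):
    """Naive dense product of an m x n and an n x p matrix (lists of rows)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def im2col(x, K):
    """Unfold every K x K patch of a 2-D input into one row (stride 1, no padding)."""
    H, W = len(x), len(x[0])
    return [
        [x[i + di][j + dj] for di in range(K) for dj in range(K)]
        for i in range(H - K + 1)
        for j in range(W - K + 1)
    ]  # (H - K + 1) * (W - K + 1) rows, K * K columns


def conv2d_gemm(x, filters):
    """Apply several K x K filters ('valid' cross-correlation) with a single GEMM."""
    K = len(filters[0])
    cols = im2col(x, K)  # P x K*K, one row per output position
    # Weight matrix: K*K rows in the same patch order as im2col, one column per filter.
    W = [[f[di][dj] for f in filters] for di in range(K) for dj in range(K)]
    out = matmul(cols, W)  # P x F
    out_h, out_w = len(x) - K + 1, len(x[0]) - K + 1
    # Fold: column f of `out`, reshaped to out_h x out_w, is the map of filter f.
    return [
        [[out[r * out_w + c][f] for c in range(out_w)] for r in range(out_h)]
        for f in range(len(filters))
    ]


def conv2d_direct(x, w):
    """Reference sliding-window implementation for comparison."""
    K = len(w)
    H, W = len(x), len(x[0])
    return [
        [sum(x[i + di][j + dj] * w[di][dj] for di in range(K) for dj in range(K))
         for j in range(W - K + 1)]
        for i in range(H - K + 1)
    ]


x = [[1, 2, 3, 0],
     [4, 5, 6, 1],
     [7, 8, 9, 2]]
w1 = [[1, 0],
      [0, -1]]
w2 = [[1, 1],
      [1, 1]]
maps = conv2d_gemm(x, [w1, w2])
print(maps[0])  # [[-4, -4, 2], [-4, -4, 4]]
print(maps[1])  # [[12, 16, 10], [24, 28, 18]]
```

The price of this lowering is memory, because overlapping patches are copied into `cols`. The gain is that all filters are applied by one large matrix product.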

The chain rule has a particularly elegant statement in terms of total derivatives. The inverse of the matrix $e^X$ is given by $e^{-X}$. Terms of a diagonal matrix through a simple matrix multiplication formula.
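The inverse of $e^X$ is $e^{-X}$. This holds because $X$ and $-X$ commute, so $e^X e^{-X} = e^{0} = I$. The sketch below illustrates it numerically by summing a truncated Taylor series. Truncation is an assumption made for brevity and is only reasonable for small-norm matrices. Library routines such as SciPy's `scipy.linalg.expm` instead use scaling and squaring with Padé approximants.

```python
def matmul(A, B):
    """Naive dense product of an m x n and an n x p matrix (lists of rows)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def expm_taylor(X, terms=30):
    """Approximate e^X = sum_{k>=0} X^k / k! by truncating after `terms` terms.

    Only adequate when X has small norm; an illustration, not a robust method.
    """
    n = len(X)
    identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    result = [row[:] for row in identity]  # k = 0 term
    term = [row[:] for row in identity]
    for k in range(1, terms):
        # Next term X^k / k! from the previous one: (X^{k-1} / (k-1)!) X / k
        term = [[v / k for v in row] for row in matmul(term, X)]
        result = [[r + t for r, t in zip(rr, tr)] for rr, tr in zip(result, term)]
    return result


X = [[0.1, 0.5],
     [-0.3, 0.2]]
neg_X = [[-v for v in row] for row in X]
product = matmul(expm_taylor(X), expm_taylor(neg_X))
print(product)  # numerically close to the 2 x 2 identity matrix
```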

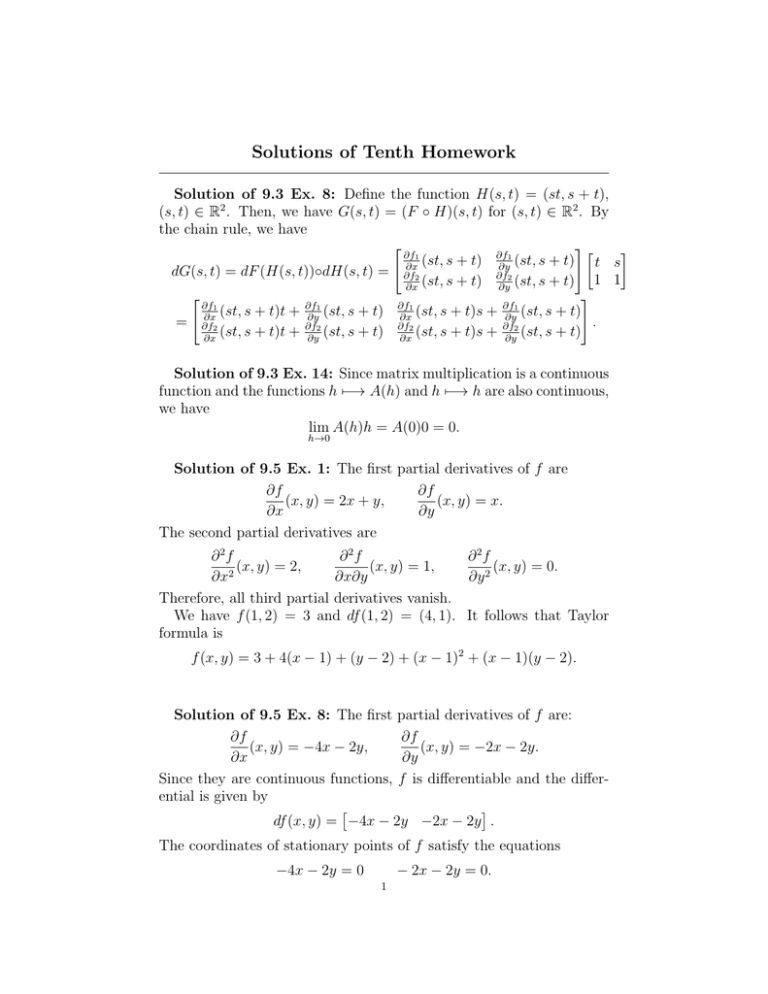

Prove that $f$ and $g$ are differentiable when considered as functions on open subsets of $\mathbb{R}^{n^2}$ and $\mathbb{R}^{n^2} \times \mathbb{R}^{n^2}$, respectively. We begin by constructing a matrix $P = \begin{pmatrix} 2 & 1 \\ 1 & 1 \end{pmatrix}$ from these eigenvectors. An identity matrix will be denoted by $I$, and $0$ will denote a null matrix.

Let $f\colon GL_n(\mathbb{R}) \to GL_n(\mathbb{R})$ be defined by $f(x) = x^{-1}$, and let $g$ be the multiplication map $g(x, y) = xy$. In the case that a matrix function of a matrix is Fréchet differentiable, the two derivatives will agree up to translation of notation. If $f$ is differentiable at $a$, then the derivative of $f$ at $a$ is the Jacobian matrix.
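For $f(x) = x^{-1}$, the derivative at $X$ is the linear map $H \mapsto -X^{-1} H X^{-1}$, a standard result. The sketch below compares that formula with a central finite difference on a $2 \times 2$ example. The matrices `X` and `H`, the step `t`, and the helper names are arbitrary illustrative choices.

```python
def matmul(A, B):
    """Naive dense product of an m x n and an n x p matrix (lists of rows)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def inv2(X):
    """Inverse of a 2 x 2 matrix via the adjugate formula."""
    (a, b), (c, d) = X
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def shifted(X, H, t):
    """Return X + t * H entrywise."""
    return [[x + t * h for x, h in zip(rx, rh)] for rx, rh in zip(X, H)]


X = [[2.0, 1.0], [1.0, 3.0]]   # an invertible matrix (det = 5)
H = [[0.3, -0.2], [0.5, 0.1]]  # a direction of perturbation
t = 1e-6

# Central difference: (f(X + tH) - f(X - tH)) / (2t) approximates Df(X)[H].
numeric = [[(p - m) / (2 * t) for p, m in zip(rp, rm)]
           for rp, rm in zip(inv2(shifted(X, H, t)), inv2(shifted(X, H, -t)))]

# Closed form: Df(X)[H] = -X^{-1} H X^{-1}.
X_inv = inv2(X)
analytic = [[-v for v in row] for row in matmul(matmul(X_inv, H), X_inv)]

max_diff = max(abs(p - q) for rp, rq in zip(numeric, analytic) for p, q in zip(rp, rq))
print(max_diff)  # tiny: limited only by finite-difference and rounding error
```

Because matrix multiplication does not commute, the derivative is not the scalar rule $-H/X^2$. The factors of $X^{-1}$ must stay on either side of $H$.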

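Given $g\colon \mathbb{R}^n \to \mathbb{R}^m$ and $f\colon \mathbb{R}^m \to \mathbb{R}^k$ and a point $a \in \mathbb{R}^n$, if $g$ is differentiable at $a$ and $f$ is differentiable at $g(a)$, then the composition $f \circ g$ is differentiable at $a$ and its derivative is $D(f \circ g)(a) = Df(g(a))\, Dg(a)$. A few things on notation, which may not be very consistent, actually. Turning to matrix multiplication, first consider a matrix $A \in \mathbb{R}^{n \times n}$.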

Short answer: yes, absolutely. Longer answer: you can view scalar division as multiplying by the reciprocal, i.e., dividing a number or matrix by a set number is the same as multiplying by 1/number. Now look what happens when we calculate the matrix product $P^{-1}AP$.

It says that, for two functions $f$ and $g$, the total derivative of the composite $f \circ g$ at $a$ satisfies $d(f \circ g)_a = df_{g(a)} \circ dg_a$. If the total derivatives of $f$ and $g$ are identified with their Jacobian matrices, then the composite on the right-hand side is simply matrix multiplication. Perhaps the closest you could get is to draw out all of the different matrix multiplication operations that would need to take place and then try to trace through the math each time.

Let $g\colon GL_n(\mathbb{R}) \times GL_n(\mathbb{R}) \to GL_n(\mathbb{R})$ be defined by $g(x, y) = xy$, where we take the relative topologies from $\mathbb{R}^{n^2}$ and $\mathbb{R}^{n^2} \times \mathbb{R}^{n^2}$, respectively.
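The statement that the Jacobian of a composite is the matrix product of the Jacobians can be checked numerically. The sketch below estimates Jacobians by central differences. It compares $J_{f \circ g}(a)$ with $J_f(g(a))\, J_g(a)$ for two hypothetical polynomial maps, $g\colon \mathbb{R}^2 \to \mathbb{R}^3$ and $f\colon \mathbb{R}^3 \to \mathbb{R}^2$, which were chosen only for illustration.

```python
def matmul(A, B):
    """Naive dense product of an m x n and an n x p matrix (lists of rows)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def jacobian(F, a, h=1e-6):
    """Central-difference Jacobian (m x n) of F: R^n -> R^m at the point a."""
    columns = []
    for j in range(len(a)):
        a_plus = list(a)
        a_minus = list(a)
        a_plus[j] += h
        a_minus[j] -= h
        columns.append([(p - m) / (2 * h) for p, m in zip(F(a_plus), F(a_minus))])
    return [list(row) for row in zip(*columns)]  # column j holds dF/dx_j


def g(v):
    """Illustrative map g: R^2 -> R^3."""
    x, y = v
    return [x * y, x + y, x * x]


def f(w):
    """Illustrative map f: R^3 -> R^2."""
    u, v, s = w
    return [u + v * s, u * v]


a = [1.5, -0.5]
J_composite = jacobian(lambda v: f(g(v)), a)           # 2 x 2 Jacobian of f o g at a
J_product = matmul(jacobian(f, g(a)), jacobian(g, a))  # (2 x 3) times (3 x 2)
max_diff = max(abs(p - q) for rp, rq in zip(J_composite, J_product) for p, q in zip(rp, rq))
print(max_diff)  # small, limited by finite-difference error
```

The shapes line up as the chain rule predicts. The $2 \times 3$ Jacobian of $f$ times the $3 \times 2$ Jacobian of $g$ gives the $2 \times 2$ Jacobian of $f \circ g$.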

For the most part, the procedure during a standard convolution, namely unfold (im2col; Chetlur et al., 2014), matrix multiplication, and fold, is identical to our method, since only the matrix-multiplication part is replaced by the differentiable random fern implementation.

As a simple demonstration, take the matrix $A = \begin{pmatrix} 1 & 2 \\ -1 & 4 \end{pmatrix}$, whose eigenvectors $(2, 1)^T$ and $(1, 1)^T$ have already been calculated.
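To see what $P^{-1}AP$ gives, the sketch below uses exact rational arithmetic from Python's standard `fractions` module. It assumes the extracted matrix lost a minus sign and takes $A = \begin{pmatrix} 1 & 2 \\ -1 & 4 \end{pmatrix}$. With that entry, $(2, 1)^T$ and $(1, 1)^T$ are eigenvectors with eigenvalues 2 and 3. The matrix $P$ has these eigenvectors as its columns.

```python
from fractions import Fraction


def matmul(A, B):
    """Naive dense product of an m x n and an n x p matrix (lists of rows)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def inv2(M):
    """Exact inverse of a 2 x 2 integer or rational matrix via the adjugate."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


A = [[1, 2],
     [-1, 4]]
P = [[2, 1],
     [1, 1]]  # columns: eigenvectors (2, 1) and (1, 1)

D = matmul(matmul(inv2(P), A), P)  # P^{-1} A P
print([[int(v) for v in row] for row in D])  # [[2, 0], [0, 3]]
```

The result is diagonal, with the eigenvalues on the diagonal in the same order as the eigenvector columns of $P$. Equivalently, $AP = PD$.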

Solved 7 In This Problem We Will Differentiate The Inver Chegg Com

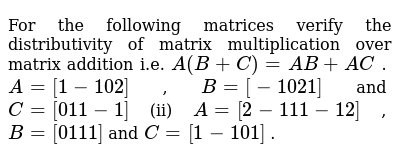

For The Following Matrices Verify The Distributivity Of Matrix

Solved 1 Let Mnxm C Denote The Set Of N M Matrices Wi Chegg Com

Last Comment On Elementary Matrices Left Multiplication Of A By A Sequence Of Invertible Elementary Matrices Carrying Out Elementary Row Operations Will Put A Into Any Row Equivalent Form Including Row Reduced Echelon Form R Thus Ea R Where E Is A

What Is A Functor Definitions And Examples Part 2

Not Understanding Derivative Of A Matrix Matrix Product Mathematics Stack Exchange

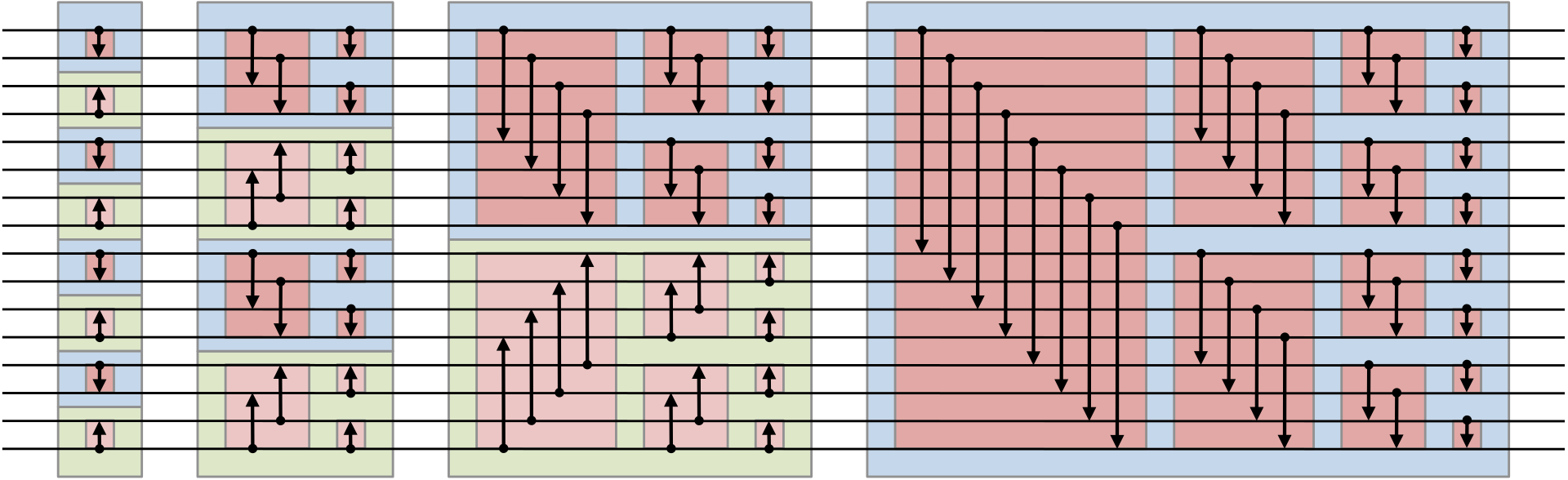

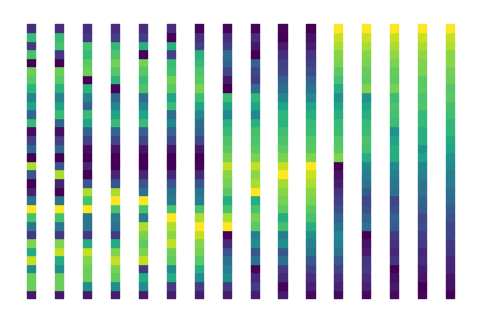

Differentiable Parallel Approximate Sorting Networks

Chapter 3 Linear Algebra February 26 Matrices 3

Can Anyone Explained How This Matrix Multiplication Become The Derivative Calculus

Why The Gradient Of Log Det X Is X 1 And Where Did Trace Tr Go Mathematics Stack Exchange
